Introduction

Artificial Intelligence and Machine Learning are transforming the way capital markets operate. AI & ML have significant impacts on trading, risk management, fraud detection, and overall efficiency. While these technologies offer many benefits, it is important for financial institutions to be aware of the potential risks.

We will cover different AI & ML business cases in this series of discussions. Today’s post focuses on risk management, and specifically on credit risk modeling with the Extreme Gradient Boosting (XGBoost) algorithm.

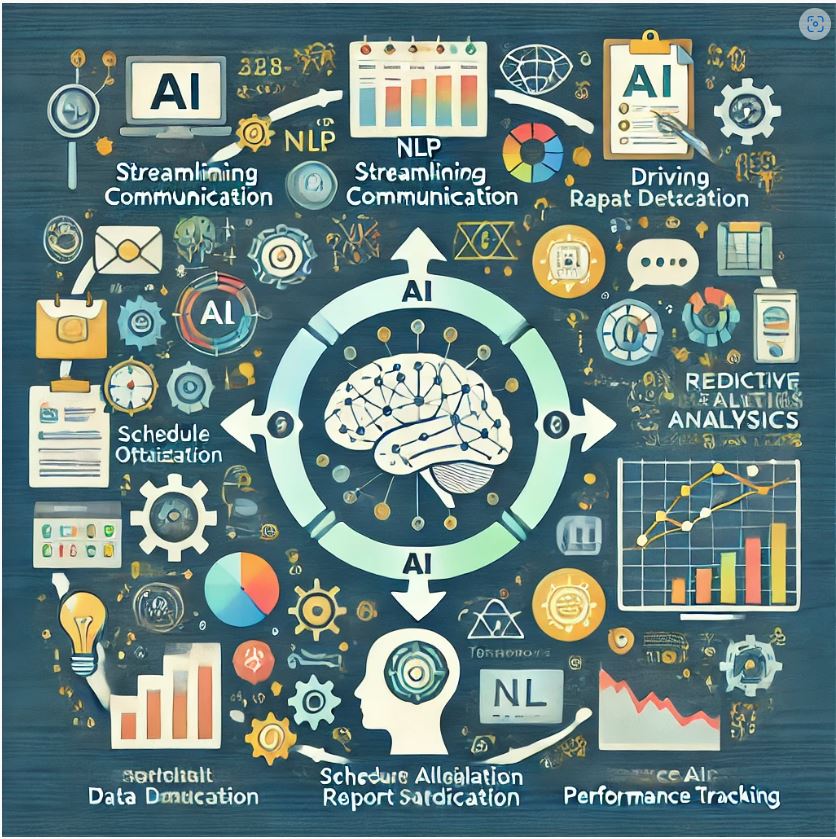

AI & ML in Risk Management

A core use of AI and ML in risk management is predictive modeling. Predictive models apply statistical analysis to historical data to identify patterns and trends that can be used to predict future events. This can be applied to a wide range of risks, including credit risk, market risk, and operational risk. AI and ML can also be used for scenario analysis and to improve stress testing.

Extreme Gradient Boosting (XGBoost) Algorithm

XGBoost is a general-purpose machine learning library that can be used to predict the likelihood that a borrower defaults. It builds an ensemble of decision trees with gradient boosting, which often produces accurate models on tabular data such as credit records. One of its key strengths is histogram-based split finding: feature values are grouped into a small number of bins, so each tree only has to evaluate candidate splits at the bin boundaries. This allows for much faster training, usually with little loss of accuracy.
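To make the histogram idea concrete, here is a minimal sketch for a single feature. It is not XGBoost's actual implementation: the function name `histogram_best_split`, the equal-width bins, and the toy data are assumptions made for illustration (the library's own binning is more sophisticated), and the gain leaves out the constant factor and the complexity penalty of the full formula.

```python
# Sketch of histogram-based split finding for one feature.
# Assumption: equal-width bins and a simplified, regularized gain score.

def histogram_best_split(values, gradients, hessians, n_bins=4, reg_lambda=1.0):
    """Return (threshold, gain) of the best split found at a bin boundary."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    if width == 0:
        width = 1.0  # constant feature: every row falls into the first bin

    # Build the histogram: per-bin sums of gradients and hessians.
    grad_sum = [0.0] * n_bins
    hess_sum = [0.0] * n_bins
    for v, g, h in zip(values, gradients, hessians):
        b = min(int((v - lo) / width), n_bins - 1)
        grad_sum[b] += g
        hess_sum[b] += h

    total_g, total_h = sum(grad_sum), sum(hess_sum)

    def score(g, h):
        return g * g / (h + reg_lambda)

    # Scan the bin boundaries only, instead of every distinct feature value.
    best_threshold, best_gain = None, 0.0
    left_g = left_h = 0.0
    for b in range(n_bins - 1):
        left_g += grad_sum[b]
        left_h += hess_sum[b]
        right_g, right_h = total_g - left_g, total_h - left_h
        if left_h <= 0 or right_h <= 0:
            continue  # one side would be empty
        gain = score(left_g, left_h) + score(right_g, right_h) - score(total_g, total_h)
        if gain > best_gain:
            best_threshold, best_gain = lo + (b + 1) * width, gain
    return best_threshold, best_gain


# Hypothetical toy data: ages and default labels, with a current prediction
# of 0.5 for everyone. For logistic loss: gradient = p - y, hessian = p * (1 - p).
ages = [20, 25, 30, 35, 50, 55, 60, 65]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
p = 0.5
gradients = [p - y for y in labels]
hessians = [p * (1 - p)] * len(labels)

threshold, gain = histogram_best_split(ages, gradients, hessians)
print(threshold, gain)
```

With four bins the scan evaluates only three candidate thresholds, no matter how many rows the dataset has, which is where the speed-up comes from.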

At a high level, XGBoost training proceeds as follows:

- Fit the first decision tree on the entire training dataset.

- For each subsequent tree, use the residuals of the current predictions (more generally, the gradient of the loss) as the target variable.

- Fit the new tree to the residuals and add its predicted values to the previous predictions.

- Repeat the previous two steps for a fixed number of iterations or until the performance on a hold-out validation set stops improving.

- Combine (sum) the predictions from all trees to make the final prediction.
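The steps above can be sketched in plain Python. This is a simplified illustration rather than XGBoost itself: it uses squared-error loss, one-split trees ("stumps") on a single feature, and a constant starting prediction instead of a full first tree. The helper names (`fit_stump`, `boost`, `predict`, `mse`) and the toy data are hypothetical.

```python
# Minimal gradient boosting sketch: squared-error loss, depth-1 trees.
# XGBoost adds regularization, second-order gradients, and much more.

def fit_stump(xs, targets):
    """Find the single threshold on a 1-D feature that minimizes squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((t - left_mean) ** 2 for t in left)
                 + sum((t - right_mean) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def boost(xs, ys, n_rounds=20, learning_rate=0.3):
    base = sum(ys) / len(ys)          # constant initial prediction
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # The residuals of the current predictions become the new target.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Add the (shrunken) output of the new tree to the running predictions.
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    """Final prediction: initial value plus the sum of all tree outputs."""
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Hypothetical toy data with a jump between x = 4 and x = 5.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]

for rounds in (1, 5, 20):
    print(rounds, mse(boost(xs, ys, n_rounds=rounds), xs, ys))
```

The training error shrinks as rounds are added. On real data, this is why a hold-out validation set is needed to decide when to stop.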

Working Example

Let’s say we have a dataset called “credit_data.csv” which contains information on past credit history, income, employment, and other relevant factors for a set of individuals. The dataset has the following columns:

- age: age of the individual

- income: annual income of the individual

- debt_ratio: ratio of total debt to total assets

- past_delinquency: number of past delinquencies

- credit_risk: binary variable indicating whether the individual is a high credit risk (1) or low credit risk (0)
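The dataset itself is not included with this post. To experiment with the example anyway, one option is to generate a synthetic stand-in file with the same columns. The sketch below is purely illustrative: the function name `make_synthetic_credit_data`, the value ranges, and the rule linking the features to credit_risk are all made-up assumptions, not properties of any real credit data.

```python
import csv
import math
import random


def make_synthetic_credit_data(path="credit_data.csv", n_rows=1000, seed=0):
    """Write a synthetic CSV with the columns described above and return its path."""
    rng = random.Random(seed)
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["age", "income", "debt_ratio", "past_delinquency", "credit_risk"])
        for _ in range(n_rows):
            age = rng.randint(21, 70)
            income = round(rng.uniform(20_000, 150_000), 2)
            debt_ratio = round(rng.uniform(0.0, 1.0), 3)
            past_delinquency = rng.randint(0, 5)
            # Made-up rule: risk rises with debt ratio and past delinquencies.
            logit = -3.0 + 3.0 * debt_ratio + 0.6 * past_delinquency
            prob_high_risk = 1.0 / (1.0 + math.exp(-logit))
            credit_risk = int(rng.random() < prob_high_risk)
            writer.writerow([age, income, debt_ratio, past_delinquency, credit_risk])
    return path


if __name__ == "__main__":
    print("wrote", make_synthetic_credit_data())
```

Because the labels come from an invented rule, any accuracy measured on this file says nothing about performance on real borrowers.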

Here is a code snippet showing how we can use XGBoost to predict credit risk with this dataset:

    import pandas as pd
    import xgboost as xgb
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split

    # load the dataset
    data = pd.read_csv("credit_data.csv")

    # separate the feature columns from the target column
    features = ["age", "income", "debt_ratio", "past_delinquency"]
    X = data[features]
    y = data["credit_risk"]

    # split the dataset into a training set (80%) and a test set (20%);
    # random_state makes the split reproducible and stratify keeps the
    # class balance the same in both sets
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42, stratify=y
    )

    # train the XGBoost model
    model = xgb.XGBClassifier(max_depth=3, n_estimators=100)
    model.fit(X_train, y_train)

    # make predictions on the test set
    y_pred = model.predict(X_test)

    # evaluate the model
    accuracy = accuracy_score(y_test, y_pred)
    print(f"Accuracy: {accuracy * 100:.2f}%")

In this example, we first load the dataset using pandas, and then split it into a training set and a test set using the train_test_split function from scikit-learn. The training set is used to train the XGBoost model, and the test set is used to evaluate its performance.

Further

The XGBoost model is created and trained using the XGBClassifier class, with the max_depth and n_estimators parameters set to 3 and 100 respectively. These parameters control the maximum depth of the decision trees and the number of trees used in the model.

We then use the trained model to make predictions on the test set, and the accuracy of the predictions is evaluated using the accuracy_score function from scikit-learn.

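This is a simple example of how XGBoost can be used to predict credit risk on a specific dataset. In real-world scenarios, you will typically need to tune the parameters and evaluate the model with additional metrics such as precision, recall, or ROC AUC, because accuracy alone can be misleading when high-risk borrowers are rare.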
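To illustrate why other metrics matter, here is a small dependency-free sketch on made-up labels. The helper functions and the numbers are hypothetical and written out by hand for clarity. In practice, scikit-learn's `recall_score`, `precision_score`, and `roc_auc_score` would normally be used instead.

```python
def confusion_counts(y_true, y_pred):
    """Return (true positives, false positives, false negatives, true negatives)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / len(y_true)


def recall(y_true, y_pred):
    """Share of actual high-risk cases that the model catches."""
    tp, _, fn, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fn) if (tp + fn) else 0.0


def roc_auc(y_true, scores):
    """Probability that a random high-risk case is scored above a random low-risk one."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up labels: only 2 of 10 borrowers are high risk.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]

# A useless model that labels everyone as low risk still looks accurate.
always_low = [0] * len(y_true)
print("accuracy:", accuracy(y_true, always_low))   # 0.8
print("recall:  ", recall(y_true, always_low))     # 0.0

# Made-up predicted probabilities, such as model.predict_proba might return.
scores = [0.10, 0.20, 0.15, 0.30, 0.05, 0.40, 0.25, 0.35, 0.70, 0.22]
print("ROC AUC: ", roc_auc(y_true, scores))        # 0.75
```

The always-low model reaches 80% accuracy while missing every high-risk borrower, which is exactly the failure that recall and ROC AUC expose.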